We’ve recently added a few tasty new features to Bitbucket Pipelines that we wanted to share with you before the holiday break. Here’s what Bitbucket Santa has put under the tree for our good Pipelines customers this December. (Naughty customers should close their browser window now!)

Test reporting with zero configuration

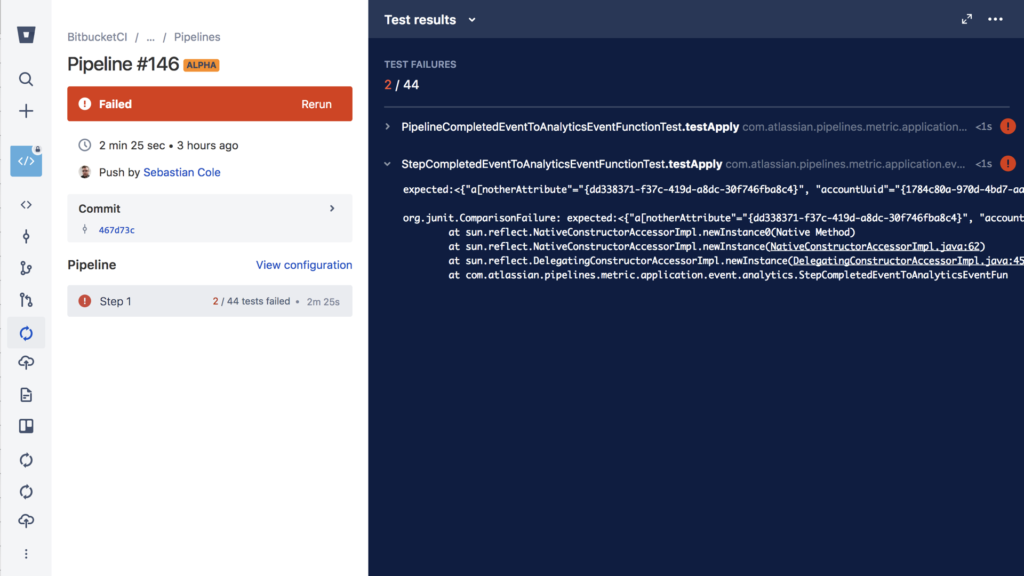

We’ve added test reporting to Bitbucket Pipelines, a highly requested feature from our customers. If you’re already generating test reports as part of your build process, you should already be seeing them picked up by Pipelines. They’ll appear in your build results and soon in notifications too.

As well as making your builds blazingly fast, our goal with Pipelines is to take the pain out of configuring CI/CD builds. To that end, we are always looking for ways to reduce configuration options that bloat and complicate things.

All the other build tools we’ve seen require you to configure exactly where your build stores test reports. Whenever you add a new module to your project, you might need to go back and update this configuration, or remember to set it when you configure a new project.

Unlike these other tools, test reporting in Pipelines requires zero configuration. We automatically scan your build directory up to 3 levels deep for directories matching “test-reports”, “test-results” or similar, then parse the JUnit-style XML files we find there.

This common format is supported and automatically generated by many tools (we’ve seen almost 10% of Pipelines builds pick up test reports automatically), and for tools that don’t automatically generate reports, we have instructions in our documentation.
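To make the scan described above concrete, here is a minimal Python sketch of the idea. It is an illustration, not the code Pipelines runs: the function names are hypothetical, only the two directory names quoted above are matched (the "or similar" names are not listed here), and the sketch assumes that direct children of the build directory count as level 1.

```python
import os
import xml.etree.ElementTree as ET

# Only the two names quoted in the post; other matching names are not listed there.
REPORT_DIR_NAMES = {"test-reports", "test-results"}
MAX_DEPTH = 3  # assumption: direct children of the build directory are level 1


def find_report_dirs(build_dir, max_depth=MAX_DEPTH):
    """Return report directories located at most max_depth levels below build_dir."""
    build_dir = os.path.abspath(build_dir)
    found = []
    for current, dirnames, _files in os.walk(build_dir):
        rel = os.path.relpath(current, build_dir)
        depth = 0 if rel == "." else rel.count(os.sep) + 1
        for name in dirnames:
            if name in REPORT_DIR_NAMES:
                found.append(os.path.join(current, name))
        if depth + 1 >= max_depth:
            dirnames[:] = []  # children sit at the depth limit, so stop descending
    return sorted(found)


def summarize(report_dir):
    """Count test outcomes across the JUnit-style XML files in report_dir."""
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    outcomes = (("failure", "failures"), ("error", "errors"), ("skipped", "skipped"))
    for filename in sorted(os.listdir(report_dir)):
        if not filename.endswith(".xml"):
            continue
        try:
            root = ET.parse(os.path.join(report_dir, filename)).getroot()
        except ET.ParseError:
            continue  # ignore files that are not well-formed XML
        # iter() finds <testcase> under either a <testsuite> or a <testsuites> root
        for case in root.iter("testcase"):
            totals["tests"] += 1
            for tag, key in outcomes:
                if case.find(tag) is not None:
                    totals[key] += 1
    return totals
```

Calling `find_report_dirs(".")` from the root of a build and passing each result to `summarize` gives per-directory totals, which is roughly the information a test report view needs.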

A few improvements to test reporting are still in progress, like showing steps with successful tests, but we’re happy to make our first iteration available to you now.

Run Docker containers and docker-compose files

We’re also excited to announce that Pipelines now offers complete hosted Docker support, allowing you to build, run and test your Docker-based services in any configuration that doesn’t require privileged mode on the host. This includes using docker-compose to start up a set of microservices for testing on Pipelines.

Back in April 2017, we introduced the ability to build, tag and push Docker images from Pipelines up to your preferred registry. However, due to the security model of the Docker daemons in our shared infrastructure, we couldn’t offer the ability to run Docker containers and related tasks.

Thanks to some innovative work by our engineering team, we’ve extended our Docker authentication plugin to support all Docker commands while still preventing privileged commands from being run.

Because we now allow starting arbitrary containers via docker run, we now also enforce a 1GB memory limit on Docker usage in Pipelines, which was previously unlimited. You can read more about this change and how it might affect you in our infrastructure changes documentation.

Large builds: double resources for double cost

Ever since we launched Pipelines, we’ve had demand to support ever larger builds. We started offering 2GB of memory by default, and increased that to 4GB shortly after launch. Allowing builds with even more memory is a common request.

But one does not simply increase the resources allocated to customers on a hosted build service. Our default allocation factors in our hosting costs, typical customer needs, and maintaining a competitive price. To offer configuration options for larger builds, our scheduling and auto-scaling systems have to handle builds of varying sizes while continuing to keep queue times to an absolute minimum. Fortunately, that is what we’ve been able to achieve.

I’m pleased to announce that Pipelines now offers 8GB of memory, and similarly doubled resources (CPU, network, etc.), as a new option for customers. Simply configure size: 2x in your Pipelines YAML file, either globally or on a specific step, to take advantage of this.

Steps that use double resources will consume twice the number of build minutes from your monthly allocation, effectively costing you twice as much. So we recommend configuring large builds only where you need additional memory or speed.

    options:
      size: 2x  # all steps in this repo get 8GB memory

    pipelines:
      default:
        - step:
            size: 2x  # or configure at step level
            script:
              - ...
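As a rough illustration of the billing note above, the following Python sketch adds up the build minutes a run would consume when some steps use double resources. The function name and the "1x" label for default-sized steps are assumptions made for this example; the only rule taken from the text is that a 2x step consumes twice the build minutes.

```python
# Multiplier applied to wall-clock minutes; "1x" is an assumed label for default steps.
SIZE_MULTIPLIER = {"1x": 1, "2x": 2}


def billed_minutes(steps):
    """Sum the build minutes consumed by (minutes, size) pairs."""
    return sum(minutes * SIZE_MULTIPLIER[size] for minutes, size in steps)


# A 10-minute default step plus a 6-minute 2x step: 10 + 6 * 2 = 22 build minutes.
example_total = billed_minutes([(10, "1x"), (6, "2x")])
```

A 2x step only reduces the minutes you consume if it finishes in less than half the time, which is why it makes sense to size up only the steps that need the extra memory or speed.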

Our early stats show that large builds have decreased the average build time for customers that use them, due to decreased CPU contention on our hosts, but your results will depend on the specific tasks your build performs.

We’re sure the optional extra memory and CPU allocation will prove extremely useful to the growing number of professional teams that are relying on Bitbucket Pipelines for all their CI/CD needs.

Happy holidays!

We hope you enjoy these great new additions to Bitbucket, and thanks to the thousands of new customers who joined us this year.

Please let us know how Bitbucket and Pipelines are helping you and your team to build great software. We’re always excited to hear your stories.